What direction will front-end engineering on the web go? How will applications be built and delivered in the near future?

The web started off as a way to link documents together, as an implementation of hypertext. Hypertext grew out of an idea for helping humans organize their thoughts, outlined in Vannevar Bush’s essay As We May Think back in 1945. But the web didn’t stay just a way of organizing human thought.

In May 1995, Ben Slivka at Microsoft was working on Internet Explorer, and in his memo, The Web is the Next Platform, he correctly saw the internet as an application delivery platform. It didn’t take much longer for Bill Gates to come around.

Once we got used to that idea, a slew of Web 2.0 applications appeared in the mid-2000s, such as Del.icio.us, Flickr, and WordPress. Because the web is stateless, the application was delivered anew on every request: the server rendered and sent back a complete page each time. That’s why, when you used the web back then, the entire page would flicker, refresh, and reload.

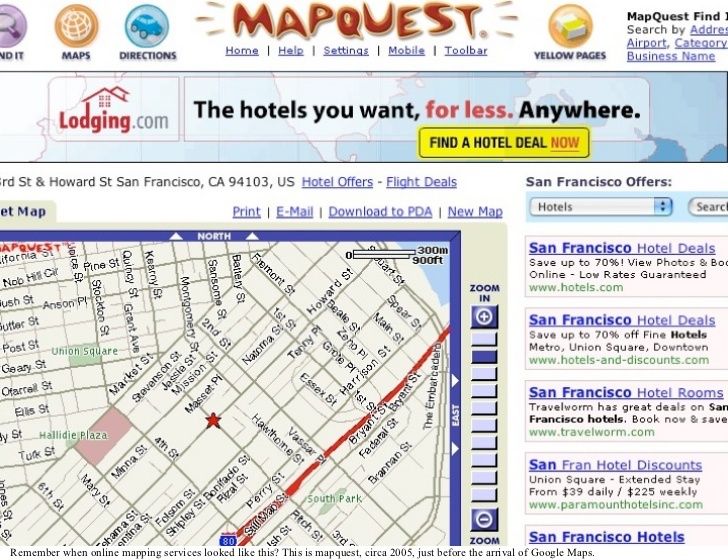

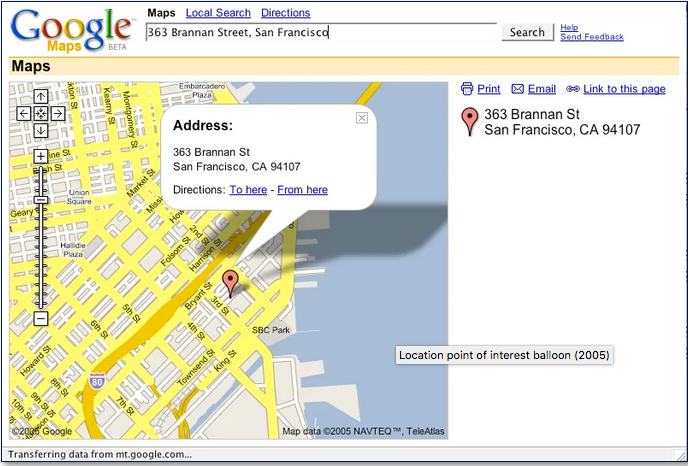

Then around 2005, web devs started leveraging a then-little-known piece of technology from the late ’90s called XMLHttpRequest to dynamically update a web page. Dubbed AJAX (Asynchronous JavaScript and XML), the technique requested just a piece of data from the server and updated only part of the page. Its most striking application was Google Maps, which launched as competition to the then-dominant MapQuest. MapQuest required users to click a thin blue bar to pan in one of the compass directions to see more of the map.

By contrast, Google Maps let you drag the map around to navigate, and it would dynamically load the parts of the map coming into view. I remember being blown away when I first used it.
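To make the contrast concrete, here is a minimal TypeScript sketch of the idea behind dragging an AJAX-style map: work out which map tiles are in view and request only the ones not already loaded, instead of reloading the whole page. The tile size, the `TileLoader` class, and the `/tiles/x/y.png` URL are all hypothetical illustrations; this is not how Google Maps is actually implemented.

```typescript
// Hypothetical tile grid: the world map is cut into square tiles, and the
// viewport is given in world pixel coordinates. TILE_SIZE is an assumption.
const TILE_SIZE = 256;

interface Tile {
  x: number;
  y: number;
}

interface Viewport {
  left: number;
  top: number;
  width: number;
  height: number;
}

// Returns every tile that overlaps the viewport rectangle.
function visibleTiles(view: Viewport): Tile[] {
  const firstX = Math.floor(view.left / TILE_SIZE);
  const firstY = Math.floor(view.top / TILE_SIZE);
  const lastX = Math.floor((view.left + view.width - 1) / TILE_SIZE);
  const lastY = Math.floor((view.top + view.height - 1) / TILE_SIZE);
  const tiles: Tile[] = [];
  for (let y = firstY; y <= lastY; y++) {
    for (let x = firstX; x <= lastX; x++) {
      tiles.push({ x, y });
    }
  }
  return tiles;
}

// Remembers which tiles are already on the page and requests only new ones.
class TileLoader {
  private readonly loaded = new Set<string>();
  private readonly requestTile: (tile: Tile) => void;

  constructor(requestTile: (tile: Tile) => void) {
    this.requestTile = requestTile;
  }

  // Call this whenever the user drags the map; returns the newly requested tiles.
  update(view: Viewport): Tile[] {
    const fresh: Tile[] = [];
    for (const tile of visibleTiles(view)) {
      const key = `${tile.x},${tile.y}`;
      if (!this.loaded.has(key)) {
        this.loaded.add(key);
        this.requestTile(tile);
        fresh.push(tile);
      }
    }
    return fresh;
  }
}

// Usage: in a browser, requestTile might issue an XMLHttpRequest or fetch()
// for a hypothetical tile URL; here it just logs the request it would make.
const loader = new TileLoader((t) => console.log(`GET /tiles/${t.x}/${t.y}.png`));
loader.update({ left: 0, top: 0, width: 512, height: 256 });   // requests tiles (0,0) and (1,0)
loader.update({ left: 256, top: 0, width: 512, height: 256 }); // requests only tile (2,0)
```

Injecting the request function keeps the "what is newly visible" logic separate from the network call, which is the part AJAX made possible: only the new tiles travel over the wire.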

It was also around this time that John Resig started working on jQuery. From this point on, frontend engineering started to diverge from backend engineering, and it has never looked back, leading to an explosion of frontend frameworks, from Backbone, Knockout, and Ember to React, Vue.js, Svelte, and Next.js.

Frontend devs are always anxious about whether they’ve hitched themselves to the right wagon. I don’t have a crystal ball, but I see a couple of interesting things on the horizon.

First on my radar is Deno, a runtime: a program that runs JavaScript (and TypeScript) programs. Typically, we use the browser to download and run JavaScript programs every time we visit a URL. Deno strips out everything else a browser does and focuses on just one part of its execution model: running JavaScript programs downloaded from the web in a sandboxed environment.

> Deno will always be distributed as a single executable. Given a URL to a Deno program, it is runnable with nothing more than the ~15 megabyte zipped executable. Deno explicitly takes on the role of both runtime and package manager. It uses a standard browser-compatible protocol for loading modules: URLs. (Introduction to Deno)

This means you can still deliver web apps, but through a ~15 MB executable instead of a full web browser. It also frees us from relying on npm, run by a private company, for our public developer libraries. Taken a step further, you could conceivably publish JavaScript libraries on IPFS or Filecoin so they’re always available without depending on any private company. Deno has the potential to change how applications are distributed.

Second on my radar is WebAssembly (WASM). Most web applications aren’t compiled ahead of time. They ship as JavaScript source that the browser parses and executes on the fly as it downloads. That’s why JavaScript dominates web application frontends: for most of the web’s history, it was the only language browsers could execute.

But what if browsers had a common binary format as a compile target? Then you could write an application in any language with a compiler for that target, deploy it to the web, and browsers could run it. That is the role WASM plays.
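To make the compile-target idea concrete, here is a minimal TypeScript sketch that loads a tiny WASM binary and calls a function it exports, using the standard `WebAssembly.Module` and `WebAssembly.Instance` JavaScript API. The bytes encode an illustrative module exporting `add(i32, i32) -> i32`; they are hand-assembled only to keep the example self-contained, whereas in practice a compiler (for example, Rust targeting `wasm32-unknown-unknown`) would emit them.

```typescript
// A hand-assembled WebAssembly module exporting add(i32, i32) -> i32.
// Normally a compiler produces these bytes; they are written out by hand here.
const wasmBytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, // magic number: "\0asm"
  0x01, 0x00, 0x00, 0x00, // binary format version 1
  // Type section: one function type (i32, i32) -> i32
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f,
  // Function section: one function, using type 0
  0x03, 0x02, 0x01, 0x00,
  // Export section: export function 0 under the name "add"
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00,
  // Code section: local.get 0, local.get 1, i32.add, end
  0x0a, 0x09, 0x01, 0x07, 0x00, 0x20, 0x00, 0x20, 0x01, 0x6a, 0x0b,
]);

// Compile and instantiate synchronously for brevity. Some browsers restrict
// synchronous compilation of large modules on the main thread, so real apps
// usually call WebAssembly.instantiateStreaming(fetch(url)) instead.
const wasmModule = new WebAssembly.Module(wasmBytes);
const instance = new WebAssembly.Instance(wasmModule, {});
const add = instance.exports.add as (a: number, b: number) => number;

console.log(add(2, 3)); // 5
```

The exported function runs with WASM’s own semantics, not JavaScript’s: `add(2147483647, 1)` wraps around to `-2147483648` because the module works on 32-bit integers.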

Right now, I see the most WASM activity in the Rust ecosystem. Rust programmers are doing some amazing things with WASM. Makepad is an IDE that blew me away the same way Google Maps blew me away 15 years ago. First, it supports live coding, where changes to the code are immediately reflected in the running program. Second, the UI is butter smooth. Try pressing the Alt/Option key and watch the details of the code shrink away so you get a sense of the overall code structure. Lastly, it supports a VR mode, so you can code collaboratively with other people while feeling present in the same space.

Rik Arends, the person behind Makepad, had this to say:

> The reason game-engines look so much faster than web-browsers is because the W3C specs force browser implementations to be slow. Covering the edgecases quickly gums up any attempt to be fast. In a game engine you simply don’t do the expensive thing because its slow. (https://twitter.com/rikarends/status/1327190734106144768)

That brings me to the last thing on my radar: Godot, a game engine and IDE that can compile to WASM. Normally, game devs and web devs are worlds apart. The tools, ecosystems, and target customers are all different, so there’s not much cross-pollination. However, now that WASM is a compile target, people have started experimenting with building entire web applications in Godot and deploying them as WASM.

Given the success of applications like Figma, whose editor runs on WASM, I think we’ll see more and more web applications that leverage WASM to do what we didn’t think was possible before, and none of this will be done with the current frontend stack. Whether it will be done with Godot or something else remains to be seen.

A common theme I see here is the disintermediation of parts of the browser in order to change how distribution works. With a change in distribution comes a change in possible business models. Will React and other frontend frameworks go away? No. For many applications, they will be good enough. But web applications with harder demands, ones that need to wow users and create unique experiences, will increasingly be built with WASM.